Texture Space Translucency in Commercial Engines

Real-time Texture Space Translucency in Unity as research

The goal of this research was to see translucency implemented in a commercially available engine and to evaluate the performance of that implementation.

A second goal was to create a demo that shows the capabilities of the technique and is accessible enough that users can easily try it out and adjust the settings themselves.

The demo was made in Unity.

Translucency physics

Let's start with a quick and basic rundown of translucency, to understand what it is and what it does. This makes it easier to understand what was accomplished with this project.

Translucency is similar to transparency, as both phenomena are the result of light particles moving through a medium. In transparent objects like glass, the light can basically move in a single straight line. In translucent objects and materials, however, the light gets scattered below the surface, causing it to give off what can be described as a "shine", which can be seen in the image to the right.

A way to simulate these light rays going through our objects and materials is needed. With it, some good old math can approximate the amount of light coming out of a given position, and thus the coloration of the material. The technique used is shown in the presentation by SEED - EA, slides 55-63.

Now, with that introduction behind us, let's delve into the project!

Baking

Engines don’t often come with a full, adjustable baking implementation, so a simple and robust method for doing it may be needed. A good example of this for the Unity engine comes from Snelha Belkhale, in which an orthographic camera and some custom shaders are used to render data onto a render target, which is then saved to an image file.
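To illustrate the idea of writing mesh data into texture space, here is a minimal CPU-side sketch in Python. It is not the Unity camera-and-shader setup referenced above. It assumes the mesh has non-overlapping UV coordinates and simply interpolates per-vertex values across one triangle in UV space. The names `edge`, `bake_triangle`, and `make_texture` are hypothetical helpers made up for this example.

```python
def make_texture(size):
    """Create a size x size grid of empty texels (None = nothing baked yet)."""
    return [[None] * size for _ in range(size)]

def edge(a, b, p):
    """Twice the signed area of triangle (a, b, p) in 2D."""
    return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0])

def bake_triangle(texture, uvs, values):
    """Write barycentric-interpolated vertex values into covered texels.

    uvs:    three (u, v) pairs in [0, 1], one per triangle corner
    values: three scalars, one per corner (e.g. a baked depth)
    """
    size = len(texture)
    a, b, c = uvs
    area = edge(a, b, c)
    if area == 0.0:
        return  # triangle is degenerate in UV space, nothing to write
    for y in range(size):
        for x in range(size):
            # Sample at the texel centre, expressed in UV coordinates.
            p = ((x + 0.5) / size, (y + 0.5) / size)
            w0 = edge(b, c, p) / area
            w1 = edge(c, a, p) / area
            w2 = edge(a, b, p) / area
            # All weights non-negative means the texel centre is inside.
            if w0 >= 0.0 and w1 >= 0.0 and w2 >= 0.0:
                texture[y][x] = (w0 * values[0]
                                 + w1 * values[1]
                                 + w2 * values[2])

# Usage: bake one triangle into a 4x4 texture.
tex = make_texture(4)
bake_triangle(tex, [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)], [0.0, 1.0, 0.0])
```

A GPU version gets the same result for free from the rasterizer, which is presumably why the camera-based approach is attractive in an engine.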

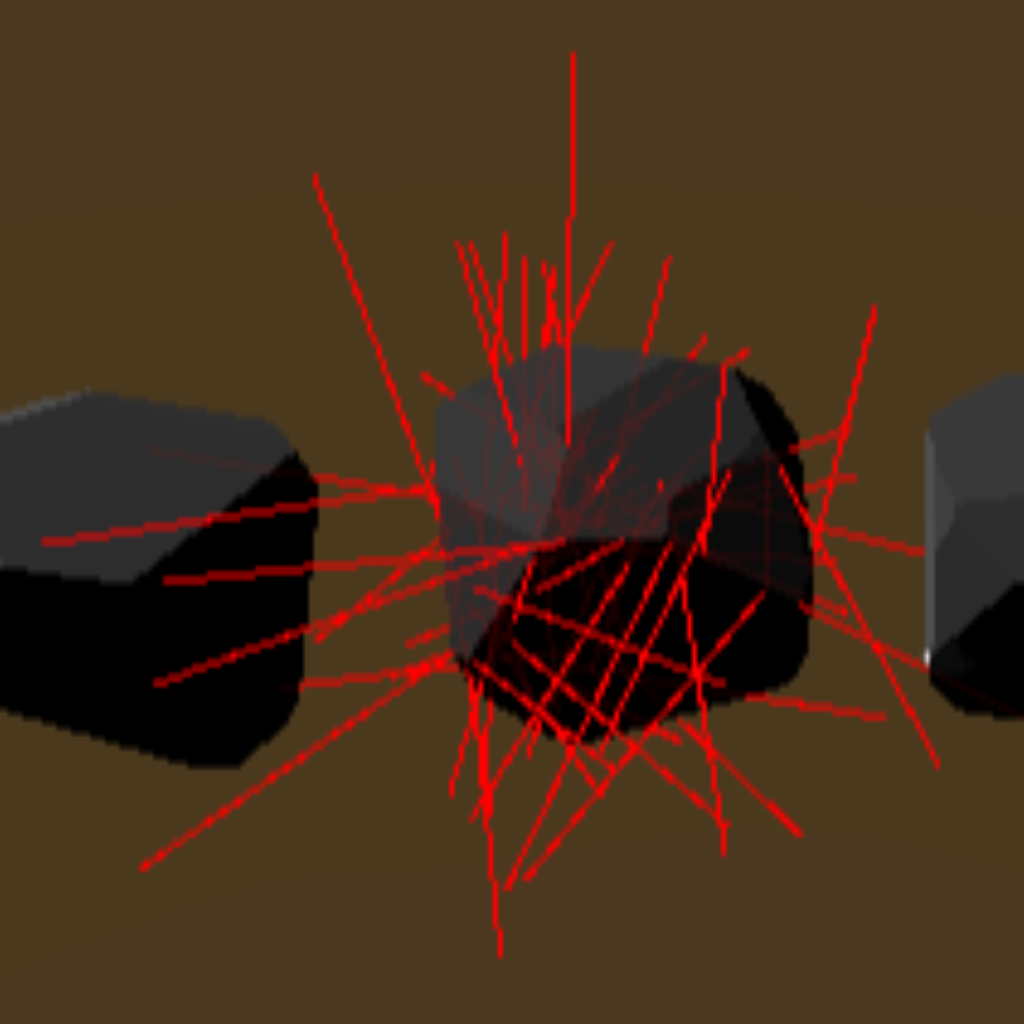

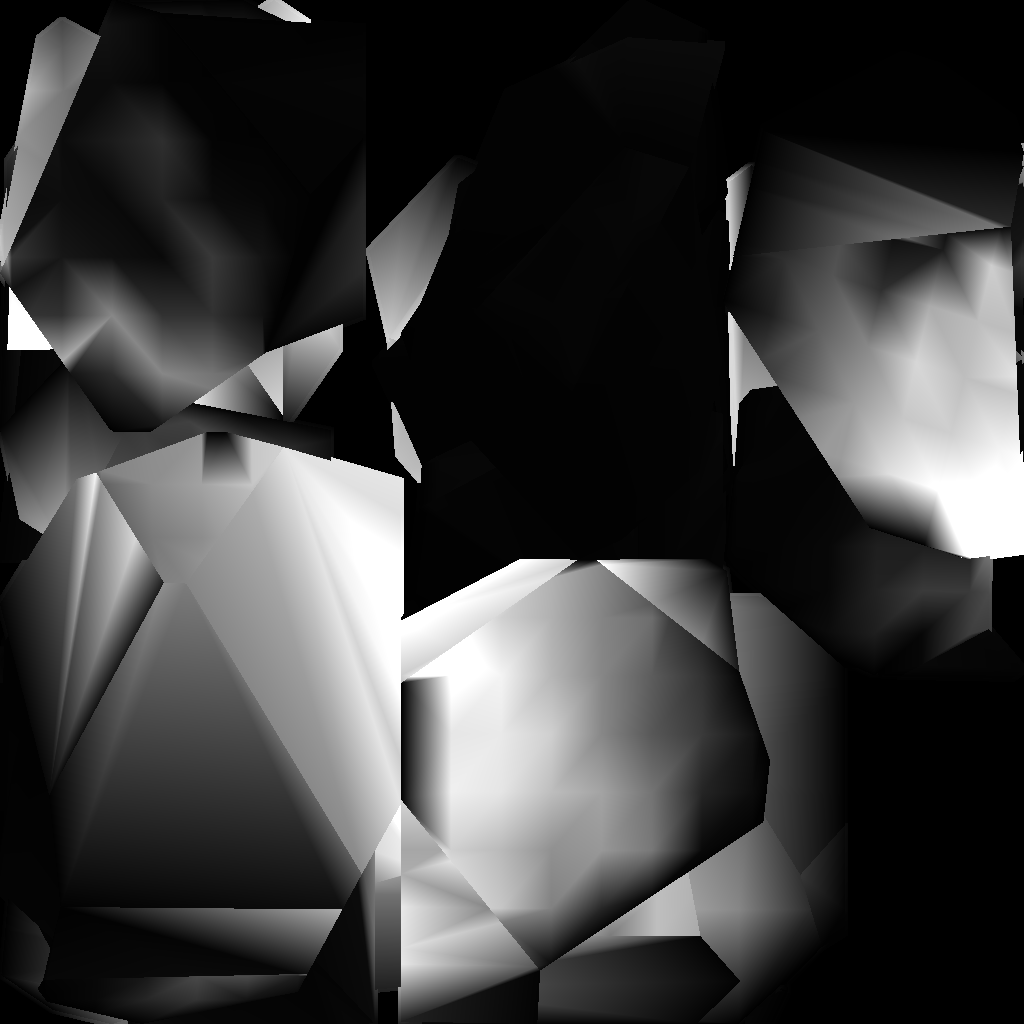

Some progress pictures are visible below, with the rightmost being the final result:

Compute shader

The translucency calculations need some parameters to function correctly, in particular the average depth of the object at each position (in our case, each vertex). Normally this can be obtained in real time on ray-tracing-capable hardware. Unfortunately for me, my hardware is not RTX capable, so an alternative was needed. Easy enough! In the ray-traced solution mentioned in the SEED presentation, the gathered data is stored in a texture. So we can do the same ahead of time and simply keep reusing that data. This way the hardware requirements are circumvented, although perhaps at the cost of some visual fidelity.

Credit: SEED - EA

The following steps need to be executed. The calculations are done per vertex of the mesh, so a high-poly mesh is preferred in order to carry across more detail. To get the starting position of the ray, the unit vector of the inverted vertex normal is added to the vertex position, as shown in the figure above, which lays out the steps of the sampling method.

From here, a random direction can be chosen for the ray. The research of Barré-Brisebois suggests using an importance-sampled phase function for this, but that goes beyond the scope of this research. Instead, a noise texture is used to sample a normal from.

With both the origin and the direction of the ray, a ray cast can be made against the polygons of the mesh. The distance to the intersection point is kept and, if there is more than one sample per vertex, the results are averaged. This way, a smoother and more accurate value can be achieved. The depth value can then be stored in the vertex color, to be baked out into a texture later.
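The sampling steps can be sketched on the CPU as follows. This is a minimal Python illustration under several assumptions, not the compute shader used in the project. Directions come from Python's random generator instead of a noise texture, rays are restricted to the hemisphere facing into the object, the ray start is pushed inward by a small `offset` parameter, and the intersection test is the standard Möller-Trumbore algorithm. All function names are hypothetical.

```python
import math
import random

def sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])

def scale(a, s):
    return (a[0] * s, a[1] * s, a[2] * s)

def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def normalize(a):
    return scale(a, 1.0 / math.sqrt(dot(a, a)))

def ray_triangle(origin, direction, v0, v1, v2, eps=1e-9):
    """Moller-Trumbore test: return the hit distance, or None on a miss."""
    e1, e2 = sub(v1, v0), sub(v2, v0)
    p = cross(direction, e2)
    det = dot(e1, p)
    if abs(det) < eps:
        return None  # ray is parallel to the triangle plane
    inv = 1.0 / det
    s = sub(origin, v0)
    u = dot(s, p) * inv
    if u < 0.0 or u > 1.0:
        return None
    q = cross(s, e1)
    v = dot(direction, q) * inv
    if v < 0.0 or u + v > 1.0:
        return None
    t = dot(e2, q) * inv
    return t if t > eps else None  # ignore hits behind the origin

def random_direction(rng):
    """Uniform random unit vector (stand-in for the noise texture lookup)."""
    while True:
        d = (rng.gauss(0, 1), rng.gauss(0, 1), rng.gauss(0, 1))
        if dot(d, d) > 1e-12:
            return normalize(d)

def vertex_thickness(position, normal, triangles,
                     samples=16, offset=1e-3, rng=None):
    """Average distance from just inside a vertex to the mesh surface."""
    rng = rng or random.Random(0)
    n = normalize(normal)
    origin = sub(position, scale(n, offset))  # step along the inverted normal
    total, hits = 0.0, 0
    for _ in range(samples):
        d = random_direction(rng)
        if dot(d, n) > 0.0:
            d = scale(d, -1.0)  # assumption: only shoot into the object
        nearest = None
        for v0, v1, v2 in triangles:
            t = ray_triangle(origin, d, v0, v1, v2)
            if t is not None and (nearest is None or t < nearest):
                nearest = t
        if nearest is not None:
            total += nearest
            hits += 1
    return total / hits if hits else 0.0
```

The brute-force loop over all triangles is fine for a one-off bake on small meshes. A larger mesh would likely want an acceleration structure, which is outside the scope of this sketch.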

Rendering

Finally, the rendering: a material is placed on the object that uses a shader with the Beer-Lambert calculation. As mentioned before, one possible idea was to perform the precomputed step in the vertex shader instead. Yet due to hardware limitations and time constraints, I was unable to test this idea.

The concern is that rendering even 1 sample per frame would simply be too expensive on my current hardware. On a heavier system capable of RTX ray tracing, with the associated shaders in Unity, this issue would most likely not exist. Yet that is speculation, as the implementation was run on a system with a GTX 1050 Ti. All of this very much exemplifies the reason the data is precomputed, especially for older systems.
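For reference, the Beer-Lambert law states that light travelling a distance d through an absorbing medium is attenuated by exp(-sigma * d). The Python sketch below shows that calculation per color channel. It is a hedged illustration, not the project's shader. The parameter names `absorption` and `light_color` are hypothetical, and the thickness is assumed to be the precomputed depth value read back from the baked texture.

```python
import math

def beer_lambert_transmittance(thickness, absorption):
    """Per-channel transmittance T = exp(-sigma * d).

    thickness:  distance travelled through the material (baked depth)
    absorption: (r, g, b) absorption coefficients, assumed per unit length
    """
    return tuple(math.exp(-sigma * thickness) for sigma in absorption)

def translucent_color(light_color, thickness, absorption):
    """Attenuate incoming light by the transmittance of the material."""
    t = beer_lambert_transmittance(thickness, absorption)
    return tuple(l * ti for l, ti in zip(light_color, t))

# Usage: thin regions let more light through than thick ones.
thin = translucent_color((1.0, 1.0, 1.0), 0.1, (0.5, 2.0, 4.0))
thick = translucent_color((1.0, 1.0, 1.0), 1.0, (0.5, 2.0, 4.0))
```

With a lower absorption coefficient on the red channel, thick regions shift toward red while thin regions stay brighter, which is the kind of coloration the baked depth is meant to drive.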

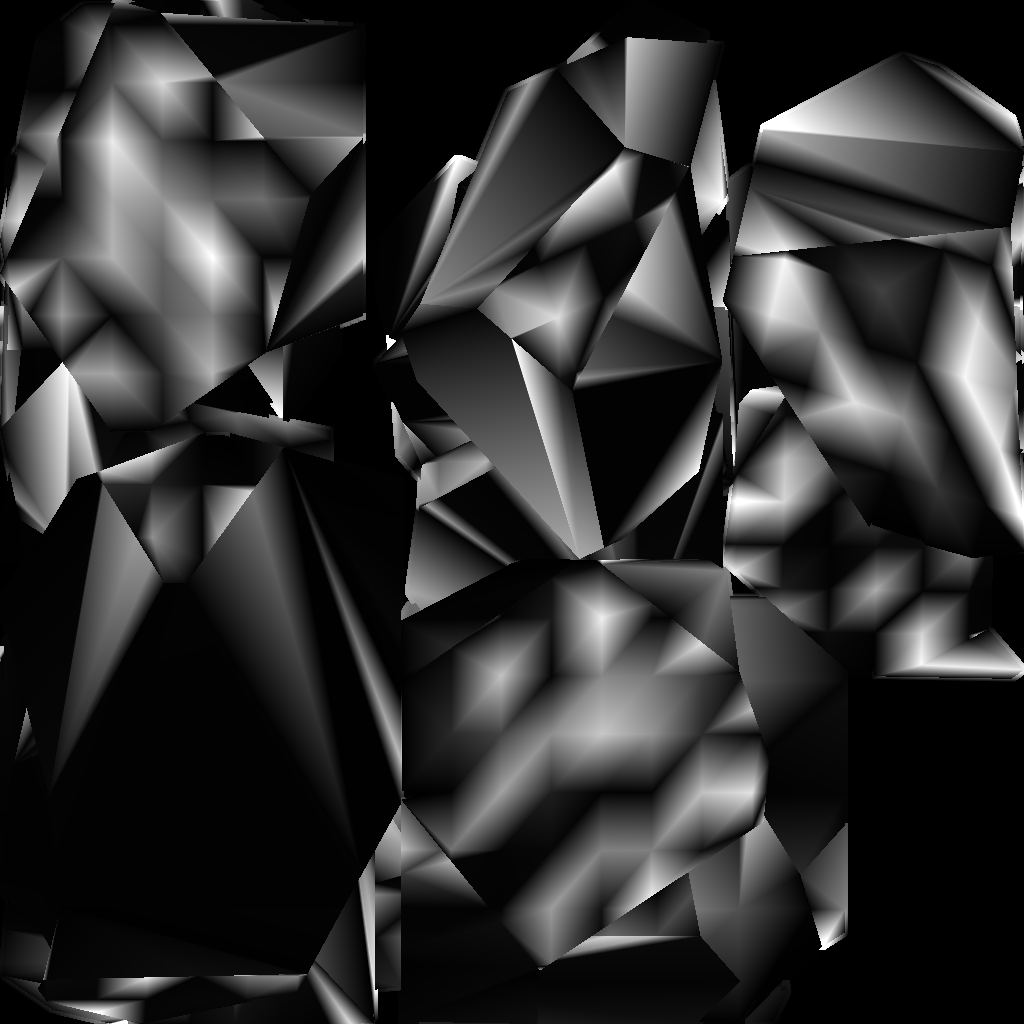

Here you can see some of the final result: